A Brief History of Netflix Personalization (Part One, from 1998 to 2006)

An inside look at Netflix's twenty-year journey to help connect members with movies they'll love. This is Part 1, from Netflix's launch in 1998 to 2006.

Gib’s note: Welcome to the 200 new members who joined since my last essay! After five months, we’re 5,400 strong. In each essay, I draw from my experience as both VP of Product at Netflix and Chief Product Officer at Chegg to help product leaders build their careers. This is essay #50.

Here are my upcoming public events and special offers:

Click here to purchase my self-paced Product Strategy course on Teachable for $200 off the normal $699 price — the coupon code is 200DISCOUNTGIBFRIENDS but the link will handle this automatically. (You can try the first two modules for free.)

I’m on Instagram at “AskGib”: fewer words, more pictures!

5/26 “Netflix’s 2021 Product Strategy” with ProductTank Austria (9 am PT)

5/27 “Hacking Your Product Leader Career” MasterClass with Productized

I’m enjoying UserLeap, my new survey tool. You can learn more about the product here.

Click here to ask and upvote questions. I answer a few each week.

A Brief History of Netflix Personalization

Part 1: From startup to 2006

Netflix began as a DVD-by-mail startup, following the invention of the DVD player in 1996. In 1998, Netflix launched its website with fewer than 1,000 DVDs. Here’s what the site looked like in its first few years:

In 1999, Netflix had 2,600 DVDs to choose from but intended to grow its library to 100,000 titles. To make it easier for members to find movies, Netflix developed a personalized merchandising system. Initially, it focused on DVDs, but in 2007, Netflix launched streaming, which used the same personalization system.

Over twenty years, the share of members’ choices that come from the merchandising system’s suggestions has grown from 2% to 80%. The system also saves members time. In the early days, a member would explore hundreds of titles before finding something they liked. Today most members look at forty choices before they hit the “play” button. Twenty years from now, Netflix hopes to play the one choice that’s “just right,” with no browsing required.

Below, I detail Netflix’s progress from the launch of Cinematch in 2000 to 2006. It’s a messy journey, with an evolving personalization strategy propelled by Netflix’s ability to execute high-cadence experiments using its home-grown A/B test system.

2000: Cinematch

Netflix introduced a personalized movie recommendation system that used member ratings to predict how much a member would like a movie. The algorithm, called Cinematch, used collaborative filtering.

Here’s an easy way to understand collaborative filtering: Imagine I like “Batman Begins” and “Breaking Bad,” and you like both, too. Our tastes overlap, so when I also like “Casino,” the algorithm suggests you’ll like “Casino.” Now, apply this approach across millions of members and titles.
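To make the idea concrete, here is a minimal sketch of neighborhood-style collaborative filtering in Python. It is not Cinematch, whose internals this essay doesn’t describe. It assumes simple “liked” sets (say, titles rated four stars or more), measures taste overlap with Jaccard similarity, and lets similar members “vote” for titles you haven’t seen. The member names and data are hypothetical.

```python
# Hypothetical toy data: the titles each member liked (e.g., rated 4+ stars).
likes: dict[str, set[str]] = {
    "gib": {"Batman Begins", "Breaking Bad", "Casino"},
    "you": {"Batman Begins", "Breaking Bad"},
    "pat": {"Notting Hill", "Love Actually"},
}


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two members' liked titles: |A & B| / |A | B|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(member: str) -> list[tuple[str, float]]:
    """Score each unseen title by summing the similarity of members who liked it."""
    mine = likes[member]
    scores: dict[str, float] = {}
    for other, theirs in likes.items():
        if other == member:
            continue
        sim = jaccard(mine, theirs)
        if sim == 0:
            continue  # no shared taste, so this member casts no vote
        for title in theirs - mine:
            scores[title] = scores.get(title, 0.0) + sim
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))


print(recommend("you"))  # [('Casino', 0.6666666666666666)]
```

Because “you” and “gib” share two of three liked titles, “Casino” scores 2/3, while “pat,” who shares no titles with “you,” casts no vote.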

2001: The Five-Star Rating System

Netflix created a five-star rating system and eventually collected billions of ratings from its members. Netflix experimented with multiple “star bars,” at times stacking the stars to indicate expected rating, average rating, and friends’ rating. (It was a mess.)

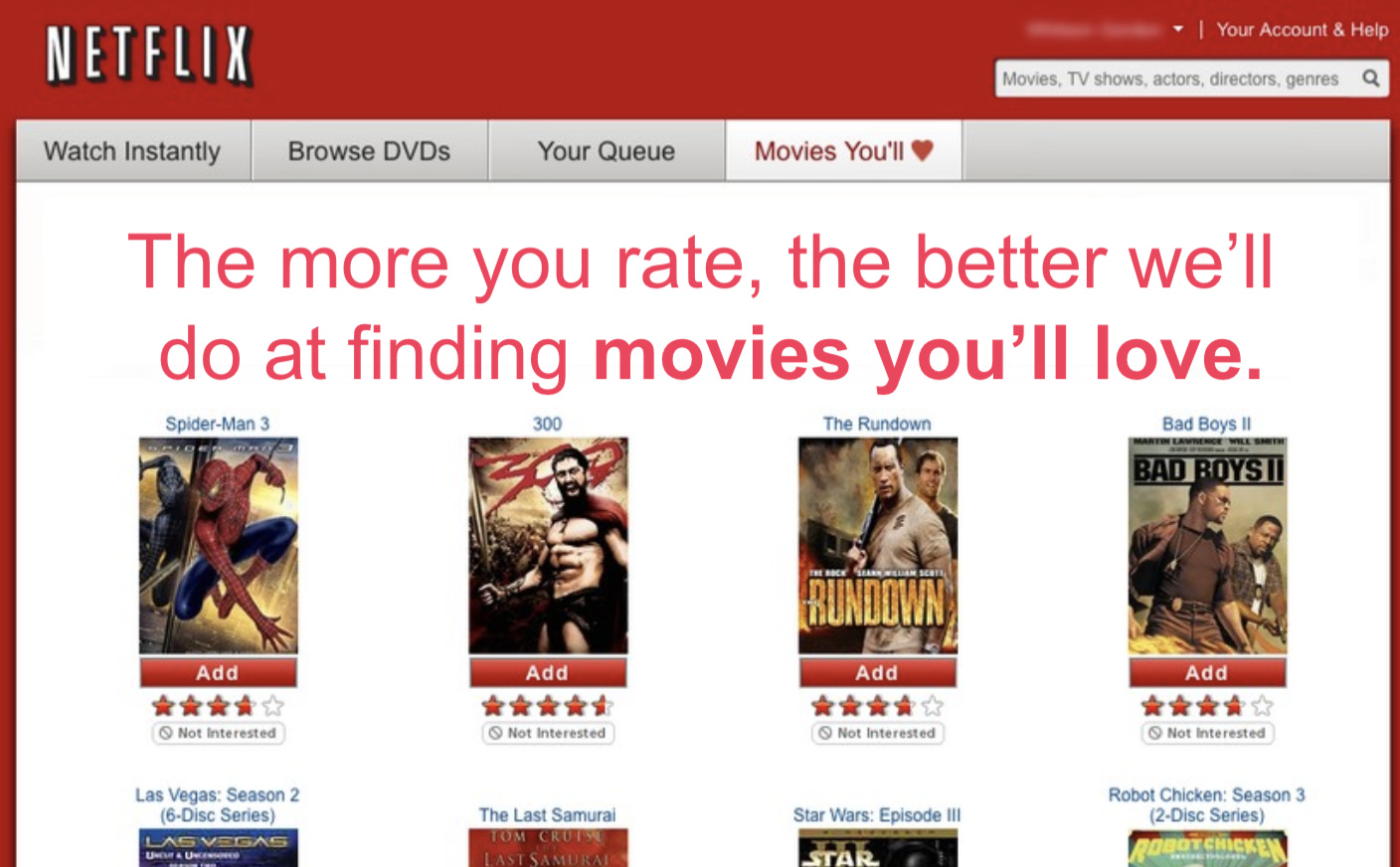

The stars represented how much Netflix predicted a member would like a movie. If a member had already watched the movie, they could rate it. The red “Add” button added the movie to a member’s queue.

2002: Multiple Algorithms

Beyond Cinematch, three other algorithms worked together to merchandise movies:

Dynamic store. This algorithm indicated if the DVD was available to merchandise. Late in the DVD era, the algorithm also determined if a DVD was available in a member’s local hub. (By 2008, Netflix only merchandised titles that were available locally to increase the likelihood of next-day DVD delivery.)

Metasims. This algorithm used all the movie data available for each title: plot synopsis, director, actors, year, awards, language, etc.

Search. There was little investment in search in the early days because Netflix assumed members searched for expensive new-release DVDs. But the team discovered that the titles members chose included lots of older, less expensive, long-tail titles, so they ramped up investment in search.

In time, Netflix blended lots of other algorithms to execute its personalized merchandising system.
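Here is a minimal sketch of how two of these pieces might fit together: a dynamic-store-style availability filter runs first, then a Cinematch-style predicted rating ranks what’s left. The `Title` type, hub names, star values, and the filter-then-rank order are all hypothetical illustrations, not Netflix’s actual blending logic.

```python
from dataclasses import dataclass


@dataclass
class Title:
    name: str
    predicted_stars: float   # hypothetical Cinematch-style predicted rating
    hubs_in_stock: set[str]  # hypothetical hubs holding an available copy


def merchandise(titles: list[Title], member_hub: str, k: int = 3) -> list[str]:
    """Dynamic-store-style filter first, then rank by predicted rating."""
    available = [t for t in titles if member_hub in t.hubs_in_stock]
    available.sort(key=lambda t: t.predicted_stars, reverse=True)
    return [t.name for t in available[:k]]


catalog = [
    Title("Casino", 4.6, {"san_jose"}),
    Title("Heat", 4.4, {"san_jose", "boston"}),
    Title("Fargo", 4.8, {"boston"}),
    Title("Memento", 4.1, {"san_jose"}),
]
print(merchandise(catalog, "san_jose"))  # ['Casino', 'Heat', 'Memento']
```

In this toy catalog, “Fargo” has the highest predicted rating but never appears for a San Jose member, because no copy is available at that hub.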

2004: Profiles

Recognizing that multiple family members used a shared account, Netflix launched “Profiles.” This feature enabled each family member to create their own movie list. It was a highly requested feature, but only two percent of members used it despite aggressive promotion. Managing an ordered list of DVDs was a lot of work, and only one person in each household was willing to do it.

Given the low adoption, Netflix announced its plan to kill the feature but capitulated in the face of member backlash. A small set of users cared deeply about the feature; they were afraid that losing Profiles would ruin their marriages! As an example of “all members are not created equal,” half the Netflix board used the feature.

2004: Netflix launches “Friends.”

The hypothesis: if you create a network of friends within Netflix, they’ll suggest great movie ideas to each other and won’t quit the service because they don’t want to leave their friends. At launch, 2% of Netflix members connected with at least one friend, but this metric never moved beyond 5%.

Netflix killed the feature in 2010 as part of its discipline of “scraping barnacles” — removing features that members didn’t value. Unlike Profiles, there was no user revolt.

Two insights on social in the context of movies:

Your friends have bad taste.

You don’t want your friends to know all the movies you’re watching.

These insights were surprising during a decade when Facebook successfully applied social features to many product categories.

2006: The Netflix Personalization Strategy

Here’s what the personalization strategy looked like in 2006:

The intent was to gather explicit and implicit data, then use various algorithms and presentation tactics to connect members with movies they’d love. The team focused on four main strategies:

Gather explicit taste data, including movie and TV show ratings, genre ratings, and demographic data.

Explore implicit taste data like what DVDs members added to their movie list, or later, which movies members streamed.

Create algorithms and presentation layer tactics to connect members with movies they’ll love. Use the explicit/implicit taste data, along with lots of data about movies and TV shows (ratings, genres, synopsis, lead actors, directors, etc.), to create algorithms that connect members with titles. Also, create a UI that provides visual support for personalized choices.

Improve average movie ratings for each member by connecting them with better movies and TV shows.

The high-level hypothesis: personalization would improve retention by making it easy for members to find movies they’ll love.

The high-level engagement metric was retention. However, it takes years to move this metric, so Netflix used a more sensitive, short-term proxy metric: the percentage of members who rated at least 50 movies during their first two months with the service.

The theory: members would rate lots of movies to get better recommendations. Many ratings from a member signaled they appreciated the personalized recommendations they received in return for their ratings.
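Computing the proxy metric is simple arithmetic. This sketch assumes hypothetical member records (a signup date plus the date of each rating) and approximates “first two months” as 60 days; Netflix’s actual definition and data pipeline may have differed.

```python
from datetime import date, timedelta

# Hypothetical records: member -> (signup date, dates of each star rating).
members: dict[str, tuple[date, list[date]]] = {
    "m1": (date(2006, 1, 1), [date(2006, 1, 2)] * 60),  # 60 ratings in week one
    "m2": (date(2006, 1, 1), [date(2006, 1, 5)] * 10),  # only 10 ratings
    "m3": (date(2006, 1, 1), [date(2006, 4, 1)] * 80),  # 80 ratings, but too late
}

WINDOW = timedelta(days=60)  # assumption: "first two months" ~ 60 days
THRESHOLD = 50               # ratings needed to count toward the metric


def proxy_metric(records: dict[str, tuple[date, list[date]]]) -> float:
    """Share of members with at least THRESHOLD ratings inside their first WINDOW."""
    if not records:
        return 0.0
    qualifying = 0
    for signup, rating_dates in records.values():
        early = sum(1 for d in rating_dates if signup <= d < signup + WINDOW)
        if early >= THRESHOLD:
            qualifying += 1
    return qualifying / len(records)


print(f"{proxy_metric(members):.1%}")  # 33.3%
```

Only “m1” qualifies: “m2” rated too few titles, and “m3” rated plenty, but after the two-month window closed.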

Here’s a rough snapshot of the improvement in this proxy metric over time:

It took Netflix more than a decade to demonstrate that a personalized experience improved retention. But consistent growth in this proxy convinced the company to keep doubling down on personalization.

Why the 2011 dip in the metric? By this time, most members streamed movies, and Netflix had a strong implicit signal about member taste. Once you hit the “Play” button, you either kept watching or stopped. Netflix no longer needed to collect as many star ratings.

2006: The Ratings Wizard

The original petri dish for personalization was an area on the site with a “Recommendations” tab. But testing revealed that members preferred less prescriptive language. The replacement, a “Movies You’ll Heart” tab, generated many more clicks. The design team thought the tab was “fugly,” but it worked.

When members arrived at the “Movies You’ll Heart” area, the site introduced them to the “Ratings Wizard”:

Members “binge-rated” while they waited for their DVDs to arrive. The Ratings Wizard was critical in moving the “percentage of members who rate at least 50 movies in their first two months” proxy metric.

2006: Demographic data

Netflix collected age and gender data from its members, but when the team used demographics to inform predictions about a member’s movie taste, the algorithms’ predictive power did not improve. Huh?

How did Netflix measure predictive power? The proxy metric for the personalization algorithms was RMSE (Root Mean Squared Error), which measures how far the algorithm’s predicted ratings fall from members’ actual ratings: square each prediction error, average the squares, and take the square root. If Netflix predicted you would rate “Friends” and “Seinfeld” four and five stars, respectively, and you rated these shows four and five stars, the prediction was perfect (an RMSE of zero). Lower is better, so RMSE is a “down and to the right” metric; it improved over time, mainly through improvements in the collaborative filtering algorithm.
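The RMSE calculation itself is short. A minimal Python sketch, using the essay’s “Friends” and “Seinfeld” example plus a hypothetical second case where one prediction is one star off:

```python
from math import sqrt


def rmse(predicted: list[float], actual: list[float]) -> float:
    """Root mean squared error between predicted and actual star ratings."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two equal-length, non-empty lists")
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return sqrt(sum(squared) / len(squared))


# Predicted 4 and 5 stars for "Friends" and "Seinfeld".
print(rmse([4, 5], [4, 5]))  # 0.0 (a perfect prediction)
print(rmse([4, 5], [3, 5]))  # 0.7071067811865476 (one star off on one show)
```

Squaring before averaging means a single badly wrong prediction costs more than several slightly wrong ones.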

Unfortunately, age and gender data did not improve predictions: adding them did not lower RMSE. Movie tastes are hard to predict because they are very idiosyncratic. Knowing my age and gender doesn’t help predict my movie tastes. It’s much more helpful to know just a few movies or TV shows I like.

To see this insight in action today, create a new profile on your Netflix account. Netflix asks you for a few titles you like to kickstart personalization. That’s all it needs to seed the system.

2006: Collaborative Filtering in the QUACL

The QUACL is the Queue Add Confirmation Layer. Once a member added a title to their Queue, a confirmation layer would pop up suggesting similar titles. Below, a member has added “Eiken” to their movie list, and the collaborative filtering algorithm suggests six similar titles:

Over time, Netflix got better at suggesting similar titles for members to add to their queue, which grew this source from ten to fifteen percent of total queue adds. The QUACL was a great environment for testing algorithms. In fact, Netflix executed its first machine learning tests within the QUACL.
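Here is a minimal sketch of how a confirmation layer might pick similar titles with item-to-item collaborative filtering. It assumes each title is represented by the set of members who liked or added it and uses cosine similarity between those sets; the data (apart from “Eiken”) and the choice of cosine similarity are assumptions, not Netflix’s documented method. The default `k=6` mirrors the six suggestions in the QUACL example.

```python
from math import sqrt

# Hypothetical data: title -> members who liked it or added it to their queue.
fans: dict[str, set[str]] = {
    "Eiken": {"ana", "ben", "cy"},
    "Title A": {"ana", "ben", "cy", "dee"},
    "Title B": {"ana", "eve"},
    "Title C": {"dee", "eve"},
}


def cosine(a: set[str], b: set[str]) -> float:
    """Cosine similarity of two binary 'who liked it' vectors."""
    if not a or not b:
        return 0.0
    return len(a & b) / sqrt(len(a) * len(b))


def similar_titles(added: str, k: int = 6) -> list[str]:
    """Up to k titles most similar to the one just added to the queue."""
    base = fans[added]
    scored = [
        (cosine(base, who), title)
        for title, who in fans.items()
        if title != added
    ]
    # Drop titles with no overlapping fans; best match first, ties alphabetical.
    ranked = sorted(
        (pair for pair in scored if pair[0] > 0),
        key=lambda pair: (-pair[0], pair[1]),
    )
    return [title for _, title in ranked[:k]]


print(similar_titles("Eiken"))  # ['Title A', 'Title B']
```

“Title C” shares no fans with “Eiken,” so it is never suggested, however popular it might be elsewhere.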

2006: The $1M Netflix Prize

Like any startup, Netflix had limited resources. They had proven the value of “Cinematch” but had only one engineer focused on the algorithm. The solution: outsource the problem via The Netflix Prize.

Netflix offered a million-dollar prize to any team that improved the RMSE of its Cinematch algorithm by ten percent. Netflix provided anonymized data from its members as “training data” for the teams, along with a second dataset that included members’ actual ratings so teams could test their algorithms’ predictive power.
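The prize threshold is a relative reduction in RMSE: (baseline - new) / baseline must reach ten percent. A minimal sketch; the RMSE values in the example are hypothetical, not Cinematch’s actual scores.

```python
def improvement(baseline_rmse: float, new_rmse: float) -> float:
    """Fractional RMSE reduction relative to the baseline (lower RMSE is better)."""
    return (baseline_rmse - new_rmse) / baseline_rmse


def wins_prize(baseline_rmse: float, new_rmse: float,
               target: float = 0.10, tol: float = 1e-9) -> bool:
    """True if the new RMSE beats the baseline by at least `target`."""
    # Small tolerance so an exact 10% improvement isn't lost to float rounding.
    return improvement(baseline_rmse, new_rmse) >= target - tol


def target_rmse(baseline_rmse: float, target: float = 0.10) -> float:
    """The RMSE a team would need to reach to hit the target."""
    return baseline_rmse * (1 - target)


print(wins_prize(0.95, 0.85))         # True  (about a 10.5% reduction)
print(wins_prize(0.95, 0.90))         # False (about a 5.3% reduction)
print(f"{target_rmse(0.95):.4f}")     # 0.8550
```

Because the target is relative, a ten percent gain gets harder in absolute terms as the baseline improves.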

If you’d like to read more, my next “Ask Gib” essay describes Netflix personalization from 2007 to today. You’ll see how Netflix’s strategy evolved, how their appetite for risk-taking increased, and finally, how their pace of experimentation accelerated to produce step-function innovations.

Click here to read Part 2 of a “Brief History of Netflix Personalization.”

One last thing!

I hope you found this essay helpful. Before you go, I’d love you to complete the Four Ss below:

1) Subscribe now, if you’re not a member of “Ask Gib” already:

2) Share this essay with others! By doing this, we’ll collect more questions and upvotes to ensure more relevant essays:

3) Star this essay! Click the Heart icon near the top or bottom of this essay. (Yes, I know it’s a stretch to say “star” and not “heart,” but I need an “S” word.)

4) Survey it! It only takes one minute to complete the UserLeap survey for this essay, and your feedback helps me to make each essay better: Click here.

Many thanks,

Gib

PS. Got a question? Ask and upvote questions here:

PPS. Once I answer a question, I archive it. I have responded to 49 questions so far!

