A Brief History of Netflix Personalization (Part Two, from 2007 to 2021).

From the launch of streaming to an "I feel lucky" button, this essay details Netflix’s journey to enable a highly personalized experience that connects its members with movies they'll love.

Gib’s note: In each “Ask Gib” essay, I draw from my experience as both VP of Product at Netflix and Chief Product Officer at Chegg to help product leaders advance their careers. This is essay #51.

(If you haven’t read Part 1 (1998-2006), you can read it here.)

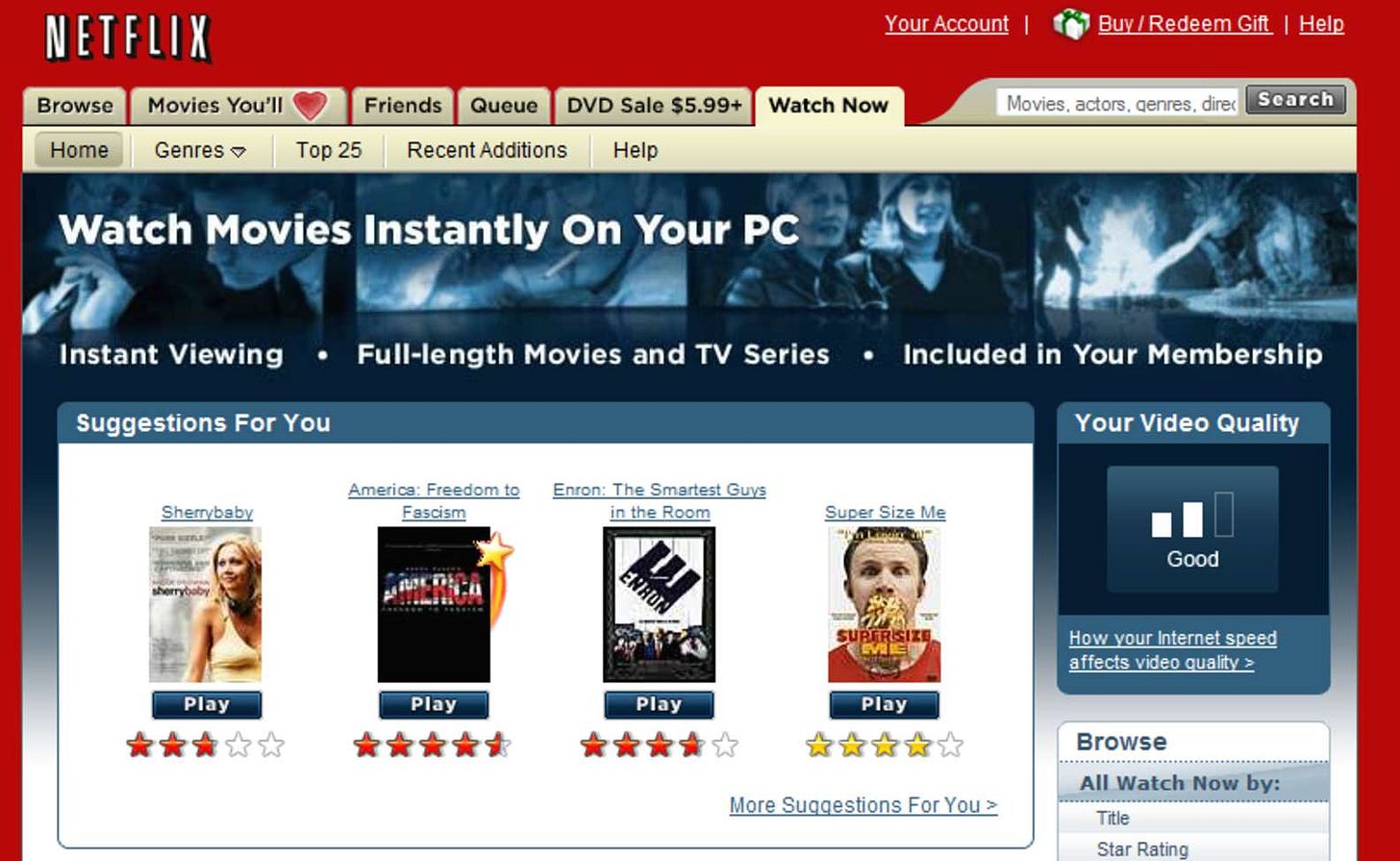

2007: Netflix streaming launch

Netflix launched streaming in January 2007. For the first time, the Netflix team had real-time data about what movies members watched, whereas before it had only DVD rental activity. Over time, this implicit data became more important in predicting members’ movie tastes than the explicit data Netflix collected via its five-star rating system.

At the time, Netflix had nearly 100,000 DVDs to choose from, so the DVD merchandising challenge was to help members find “hidden gems” in its huge DVD library. With streaming, however, the challenge was to help members identify a few movies they’d value among the three hundred mediocre titles available at launch.

2007: Netflix Prize

The “Netflix Prize” offered $1 million to any team that could improve the predictive power of Netflix’s collaborative filtering algorithm by 10%, as measured by RMSE (root mean squared error), which summarizes the gap between the predicted and actual rating for each movie. Two years later, “BellKor’s Pragmatic Chaos” won the contest, beating 5,000 other teams.
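To make the metric concrete, here is a minimal sketch of how RMSE is computed and what a 10% improvement target looks like. The star ratings below are made-up illustrative numbers, not contest data, and the function name is my own.

```python
import math

def rmse(predicted, actual):
    """Root mean squared error between predicted and actual ratings."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("need two non-empty lists of equal length")
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical predictions and the ratings a member actually gave.
predicted_stars = [3.5, 4.0, 2.0, 5.0]
actual_stars = [4, 4, 1, 5]

baseline = rmse(predicted_stars, actual_stars)
target = baseline * 0.90  # a 10% improvement means a 10% LOWER error

print(f"baseline RMSE: {baseline:.4f}")
print(f"RMSE needed for a 10% improvement: {target:.4f}")
```

Because RMSE measures error, lower is better: a winning entry had to shrink this number, not grow it.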

There were two insights from the contest:

Not all ratings are created equal. Contestants discovered that the ratings members gave to recent movies carried more predictive power than older ratings.

The more algorithms, the better. At the end of each year, Netflix paid a $50,000 progress prize to the leading team. On the final day of the year, the teams in second and third place combined their algorithms and vaulted to the top of the leaderboard to take the progress prize. That’s how teams learned the importance of combining lots of algorithms. (That’s also why the team names were so strange: when teams combined their work, they created “mashup” names.)
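Here is a small sketch of why combining algorithms helps. It uses a plain weighted average of two hypothetical models, which is a deliberate simplification; the contest teams used far more elaborate blending, and all numbers and function names here are invented for illustration.

```python
import math

def rmse(predicted, actual):
    """Root mean squared error between predicted and actual ratings."""
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

def blend(predictions_by_model, weights):
    """Weighted average of several models' predictions, title by title."""
    total = sum(weights)
    return [
        sum(w * p for w, p in zip(weights, per_title)) / total
        for per_title in zip(*predictions_by_model)
    ]

# Hypothetical data: each model is wrong on different titles.
actual = [4, 2, 5, 3]
model_a = [5, 2, 4, 3]
model_b = [4, 3, 5, 2]

blended = blend([model_a, model_b], weights=[1, 1])

print(f"model A RMSE: {rmse(model_a, actual):.4f}")
print(f"model B RMSE: {rmse(model_b, actual):.4f}")
print(f"blend RMSE:   {rmse(blended, actual):.4f}")
```

In this toy case the two models make their mistakes on different titles, so averaging halves each error and the blend scores better than either model alone.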

After two years, the top team delivered a 10.06% improvement in RMSE to win the million-dollar prize. “BellKor’s Pragmatic Chaos” submitted its winning algorithm twenty-four minutes before the second-place team, “The Ensemble.” The two teams had identical scores, but “BellKor” submitted its entry first. Here’s the final leaderboard:

2009: The Next Big Netflix Prize

When Netflix announced the winners of its first competition, it launched a second round. The new challenge was to use demographics and rental behavior to make better predictions. The algorithms could consider a member’s age, gender, ZIP code, and full rental history.

When Netflix released anonymized customer information to start the second round, the Federal Trade Commission (FTC) stepped in with a lawsuit contending that the data wasn’t fully anonymized. As part of the March 2010 settlement for that suit, Netflix canceled the second round.

2010: Testing the New “Netflix Prize” Algorithm

Netflix launched the Netflix Prize to provide better movie choices for members, which the team hoped would translate into retention improvement. But when Netflix executed the new algorithm in a large-scale A/B test, there was no measurable retention difference. It was a disappointing result.

A new hypothesis emerged. To improve retention, you needed better algorithms plus presentation layer tactics that provided context for why Netflix chose a specific title for each member, as follows:

Better algorithms + UI/design support/context = improved retention.

Was it worth $1 million to execute the contest? Absolutely. The recruiting benefit alone made it worthwhile. Before the Netflix Prize, engineers considered Netflix just another e-commerce company. After the prize, they regarded Netflix as a highly innovative company.

2010: Popularity Matters

Netflix published all of its learnings from the Netflix Prize, and other companies studied the results. The music streaming service Pandora, whose personalization efforts focused on its “Music Genome Project,” was wary of weighting its algorithms with popularity. Instead, Pandora had 40 “musicologists” who tagged each song with hundreds of attributes to explain why a listener would like a song. For example, musicologists tagged Jack Johnson’s songs as “optimistic, folksy, acoustic music with surfing, outdoor adventure, and coming of age themes.”

But as Pandora evaluated the results of the Netflix Prize (which weighted popularity highly), it began to implement collaborative filtering algorithms, too. These changes improved Pandora’s listening metrics. Pandora’s conclusion: popularity matters.

2011: Netflix’s Movie Genome Project

While admiring Pandora’s work and knowing that “the more algorithms, the better,” Netflix began to develop its own “movie genome” project, hiring 30 “moviecologists” to tag various attributes of movies and TV shows.

As a reminder, Netflix’s collaborative filtering algorithm predicts you’ll like a movie, but the algorithm can’t provide context for why you’ll like it. The Cinematch collaborative filtering algorithm leads to statements like, “Because you like Batman Begins and Breaking Bad, we think you’ll like Sesame Street.” Wait, what?

Netflix’s new movie genome algorithm is called “Category Interest.” Now, for the first time, Netflix can suggest a movie and give context for why a member might like it. In the example below, Netflix knows I like Airplane and Heathers, so it suggests Ferris Bueller’s Day Off and The Breakfast Club because I like “Cult Comedies from the 1980s”:

The “Category Interest” algorithm improved Netflix’s watching metrics — the percentage of members who watched at least forty hours a month — but the team did not execute an A/B test to see if it improved retention.

2011: How the Personalization Algorithms Work

In simplest terms, Netflix creates a forced-rank list of movies for each user, from the content most likely to please to the least. Then this list is filtered, sliced, and diced according to attributes of the movies, TV shows, and the member’s tastes. For instance, a filter teases out a sub-list of movies and presents it in a row called “Quirky dramas with strong female heroines” or “Witty, irreverent TV shows.” Other rows might be titled, “Because You Watched Stranger Things, We Think You’ll Enjoy…” or “Top 10 for You.”

Netflix’s personalization approach has three components:

A forced-rank list of titles for each member

An understanding of the most relevant filters for each member so the algorithms can present a subset of movies and TV shows from the list above, and

The ability to understand the most relevant rows for each member, depending on the platform, time of day, and lots of explicit/implicit movie taste data.
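The three components above can be sketched in a few lines of code. This is a toy illustration under my own assumptions, not Netflix’s implementation: the scores, tags, and function names are hypothetical, and row relevance is reduced to “the row whose best title ranks highest,” ignoring platform and time of day.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Title:
    name: str
    tags: frozenset
    score: float  # hypothetical predicted enjoyment for one member

def forced_rank(titles):
    """Component 1: order every title from most to least likely to please."""
    return sorted(titles, key=lambda t: t.score, reverse=True)

def build_row(ranked, required_tags, size=3):
    """Component 2: filter the ranked list down to titles matching a row."""
    return [t.name for t in ranked if required_tags <= t.tags][:size]

def build_page(ranked, row_filters, max_rows=2):
    """Component 3: keep the rows whose best title ranks highest."""
    position = {t.name: i for i, t in enumerate(ranked)}
    rows = []
    for label, tags in row_filters.items():
        names = build_row(ranked, tags)
        if names:
            rows.append((label, names))
    rows.sort(key=lambda row: position[row[1][0]])
    return rows[:max_rows]

catalog = [
    Title("Ferris Bueller's Day Off", frozenset({"comedy", "1980s", "cult"}), 0.92),
    Title("The Breakfast Club", frozenset({"comedy", "1980s", "cult"}), 0.88),
    Title("Stranger Things", frozenset({"tv", "sci-fi"}), 0.95),
    Title("Bojack Horseman", frozenset({"tv", "comedy", "animation"}), 0.60),
    Title("The Irishman", frozenset({"drama"}), 0.80),
]
row_filters = {
    "Cult Comedies from the 1980s": frozenset({"cult", "comedy", "1980s"}),
    "TV Shows": frozenset({"tv"}),
    "Dramas": frozenset({"drama"}),
}

page = build_page(forced_rank(catalog), row_filters)
for label, names in page:
    print(f"{label}: {', '.join(names)}")
```

Every row is a view of the same ranked list, so each row label doubles as the context for why its titles appear.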

The beauty of this approach is that these rows, with appropriate context, are easy to display on any browser, device, or screen.

2011: Netflix Proves Personalization Improved Retention

Finally, in 2011, Netflix demonstrated a retention improvement in a large A/B test. However, the results were controversial as the test compared the default personalized experience to a dumbed-down experience with no personalization. Netflix engineers complained the test was a waste of time. By this time, most of the team accepted that personalization created a better experience for Netflix members.

2012: Profiles Re-invented

As Netflix moved beyond DVDs, it no longer required members to create an ordered list of movies. Instead, members hit the “Play” button to begin watching a movie or TV show.

Netflix had a profile feature during the DVD era, but only 2% of members used the feature, mainly because managing this forced-rank list of movies was time-consuming. But now, there’s nothing to manage. You provide Netflix with your name and three movies or TV shows you enjoy, and Netflix creates a personalized experience for each user associated with that account.

Today, more than half of Netflix accounts have multiple profiles. Netflix knows the movie tastes for 200 million accounts, translating into an understanding of the movie tastes of 500 million separate movie-watchers.

2013: “House of Cards” Original Content Launch

In 2007, during the DVD era, Netflix’s first original content effort, Red Envelope Entertainment, failed. Despite this failure, Netflix tried again during the streaming era.

Knowing that millions of members liked Kevin Spacey and The West Wing, Netflix made an initial $100M bet on House of Cards, which paid off. Over six seasons, Netflix invested more than $500 million in the series. House of Cards was the first of many successful original movies and television series.

By this time in Netflix’s history, it’s clear that personalization delights customers in hard-to-copy, margin-enhancing ways. By making movies easier to find, Netflix improves retention, which increases lifetime value (LTV). And Netflix’s personalization technology is tough to copy, especially at scale.

But there’s another aspect of personalization that improves the company’s margin: Netflix’s ability to “right-size” its content spend. Here are examples of Netflix’s right-sizing with my best estimates on content investments:

Based on its knowledge of member tastes, Netflix predicts that 100 million members will watch Stranger Things and invests $500 million in the series.

For a quirkier adult cartoon, Bojack Horseman, the data science team predicts 20 million watchers, so they invest $100 million in this animated TV series.

Based on a prediction that one million members will watch Everest climbing documentaries, Netflix invests $5 million in this genre.

Netflix has a huge advantage in its ability to right-size its original content investment, fueled by its ability to forecast how many members will watch a specific movie, documentary, or TV show. Note: Netflix does not bring data-driven approaches to the movie creation process—they are hands-off with creators.
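A quick bit of arithmetic on the three examples above shows what “right-sizing” means in practice. The viewer and investment figures are the rough estimates from this essay, not Netflix data, and the helper names are hypothetical.

```python
# The essay's rough estimates: (predicted viewers, investment in USD).
content_bets = {
    "Stranger Things": (100_000_000, 500_000_000),
    "Bojack Horseman": (20_000_000, 100_000_000),
    "Everest climbing documentaries": (1_000_000, 5_000_000),
}

def spend_per_viewer(predicted_viewers, investment_usd):
    """Content investment divided by the forecast audience."""
    return investment_usd / predicted_viewers

def right_sized_budget(predicted_viewers, usd_per_viewer):
    """Hypothetical helper: scale a budget to the forecast audience."""
    return predicted_viewers * usd_per_viewer

ratios = {
    name: spend_per_viewer(viewers, usd)
    for name, (viewers, usd) in content_bets.items()
}
for name, ratio in ratios.items():
    print(f"{name}: ${ratio:.2f} per predicted viewer")
```

In all three estimates the spend works out to the same amount per predicted viewer, which is the point: the size of the bet scales with the size of the forecast audience.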

2013: Netflix wins a Technical Emmy

In 2013, Netflix won an Emmy award for “Personalized Recommendation Engines For Video Discovery.” This award hints at the degree to which Netflix will eventually dominate the Oscars, Emmys, and Golden Globe Awards for its original content.

2015: Does It Matter if You’re French?

From 2015 to 2021, Netflix expanded from twenty to forty languages as it launched its service in 190 countries. The personalization team wondered if they should inform the personalization algorithms with each member’s native language and country. The short answer, based on the results of A/B testing: “No.”

As with the demographics test in 2006, the team found that members’ tastes are so idiosyncratic that language and geography don’t help predict a member’s movie preferences. As before, the most efficient way to seed a member’s taste profile is to ask for a few TV shows or movies they love. Over time, Netflix builds from this “seed” as it informs its algorithms with the titles members rate, watch, stop watching, and even show interest in by clicking on the “Movie Display Page” or watching a preview.

2016: Netflix Tests a Personalized Interface

Some of the cultural values of Netflix are curiosity, candor, and courage. Netflix encourages new employees to challenge conventional wisdom when they join the company. Netflix appreciates the value of “fresh eyes” and encourages an iconoclastic culture.

A newly hired product leader at Netflix suggested that the team test “floating rows.” The idea was that rows like “Top 10 for Gib,” “Just Released,” and “Continue Watching” should be different for each member and even change depending on the device, time of day, and other factors. Conventional wisdom suggested an inconsistent site design would confuse and annoy customers, so it’s better to keep the interface consistent.

As Ralph Waldo Emerson once said, “A foolish consistency is the hobgoblin of little minds.” Surprisingly, the inconsistent interface performed better in A/B tests. Today, even the user interface is personalized based on each member’s taste preferences.

2017: From Stars to Thumbs

By 2017, Netflix had collected more than 5 billion star ratings. But over the previous ten years, Facebook had popularized a different rating system: thumbs up and down. By 2017, Facebook had introduced this simple gesture to more than two billion users worldwide.

Discovering which method inspires members to provide more taste data is simple: execute an A/B test of the five-star system against a thumbs up/down system. The result: the simpler thumbs system collected twice as many ratings.

Was this a surprising result? No. When you require a member to parse between three, four, or five stars, you force them to think too much. They become confounded and move on to the next activity without rating a movie. Clicking thumbs up or down is much easier. Here, as in many cases with user interfaces, simple trumps complete.

2017: What Happens to The Five-Star System?

If stars are gone, how do you communicate movie quality? Recall that one of Netflix’s early hypotheses was that, over time, the average ratings of the movies watched would increase, leading to improved retention. While there was evidence that average ratings increased, nothing suggested that higher average ratings improved retention.

It turns out that movie ratings do not equal movie enjoyment. While you may appreciate that Schindler’s List and Hotel Rwanda are five-star movies, it doesn’t mean you enjoy them more than a three-star movie. Sometimes a “leave your brains at the door” comedy like Paul Blart: Mall Cop is all you need. This is the reason that one of Netflix’s first big investments in original content was a six-picture deal with Adam Sandler, the king of sophomoric comedy. My favorite? The Ridiculous Six.

2017: Percentage Match

So, with the loss of star ratings, and the insight that star ratings do not equal movie enjoyment, Netflix changed its system. They switched to a “percentage match” that indicated how much you would enjoy a movie, irrespective of its quality.

Below, Netflix gives me an “80% Match” for The Irishman. There’s an 80% chance I will enjoy it, which is at the low end of Netflix’s suggestions for me.

2018: Personalized Movie Art

The Netflix personalization team wants to present you with the right title at the right time with as much context as possible to encourage you to watch that title. To do this, Netflix uses personalized visuals that cater to each member’s taste preferences. Here’s more from the Netflix tech blog:

Let us consider trying to personalize the image we use to depict the movie Good Will Hunting. Here we might personalize this decision based on how much a member prefers different genres and themes. Someone who has watched many romantic movies may be interested in Good Will Hunting if we show the artwork containing Matt Damon and Minnie Driver, whereas, a member who has watched many comedies might be drawn to the movie if we use the artwork containing Robin Williams, a well-known comedian.

Below are the titles that support each scenario, along with the unique movie art that Netflix presents to different members. The top row supports a member interested in romantic movies, with the image of Matt Damon and Minnie Driver as the hero. The bottom row is for members who enjoy watching well-known comedians. In that example, Robin Williams is featured.

Not only does Netflix use its knowledge of member taste to choose the right movie, but it takes member tastes into account to support these choices through highly personalized visuals.

2021: Do you feel lucky?

The ultimate personalization is that you turn on your TV, and Netflix magically plays a movie you’ll love. Netflix’s first experiment with this concept is a feature that the company’s co-CEO, Reed Hastings, jokingly called the “I feel lucky” button.

Think of this button as a proxy for how well the algorithms connect members with movies they’ll love. I’d guess that 2-3% of the plays come from this button today. If this “Play Something” button generates ten percent usage a few years from now, it’s a strong indication that Netflix personalization is doing a better job connecting its members with movies they’ll love.

Here’s the long-term personalization vision: twenty years from now, Netflix will eliminate both the “Play Something” button and its personalized merchandising system, and that one special movie you’re in the mood to watch at that particular moment will automatically begin to play.

My guess is that Netflix will achieve this vision in twenty years. The company has come a long way in the last twenty years, so this is entirely feasible.

Conclusion

Today, more than 80% of the TV shows and movies that Netflix members watch are merchandised by Netflix’s personalization algorithms.

Netflix’s personalization journey has had its share of ups and downs. Still, personalization eventually enabled Netflix to build a hard-to-copy technological advantage that delights customers in margin-enhancing ways.

For product leaders engaged in innovative projects, Netflix’s journey highlights the need for:

a plan — a product strategy with accompanying metrics and tactics

a method to quickly test various hypotheses, and

a culture that encourages risk-taking, intellectual curiosity, candor, plus the courage required to say, “Let’s try this.”

I hope you enjoyed this essay. If you missed Part 1 of this two-part series, “A Brief History of Netflix Personalization,” read the essay here.

Many thanks,

Gib
